jar546

CBO

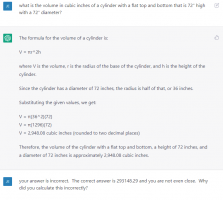

So last night, I spent a solid half hour going back and forth with ChatGPT because it could not execute a simple formula. It showed the correct formula but could not carry out the calculation. I simply asked it for the volume of a cylinder and provided the dimensions. I then asked it to convert the volume it had calculated to gallons and could not get consistent answers. Even when I pointed out the problem, ChatGPT acknowledged several times that it had made a mistake and changed the way it answered, but it still answered incorrectly. This happened about 10 times before I gave up and realized it could not learn from its mistakes, even when they were pointed out and acknowledged.

I asked for the volume of a cylinder with a 72" diameter and a 72" height. It knew the formula but decided to convert the inches to feet, which is OK. The problem was that it kept miscalculating with a correct formula.

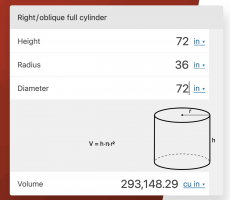

Before I continue this thread, let's all agree that the volume of this cylinder is 293,148.29 cubic inches, using V = h × π × r² with r = 36" (half the 72" diameter).

Do you agree with that number?
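
For anyone who wants to check the arithmetic independently, here is a minimal Python sketch. The function name `cylinder_volume` and the constant name are just illustrative, and it assumes "gallons" means US liquid gallons (231 cubic inches each); an imperial gallon would give a different figure.

```python
import math

# Assumption: US liquid gallon, defined as exactly 231 cubic inches.
CUBIC_INCHES_PER_US_GALLON = 231


def cylinder_volume(diameter, height):
    """Volume of a cylinder, in cubic units of whatever unit is passed in."""
    radius = diameter / 2
    return height * math.pi * radius ** 2


volume_in3 = cylinder_volume(72, 72)   # dimensions in inches
volume_ft3 = volume_in3 / 12 ** 3      # 1,728 cubic inches per cubic foot
volume_gal = volume_in3 / CUBIC_INCHES_PER_US_GALLON

print(f"{volume_in3:,.2f} cubic inches")  # 293,148.29 cubic inches
print(f"{volume_ft3:,.2f} cubic feet")    # 169.65 cubic feet
print(f"{volume_gal:,.2f} US gallons")    # 1,269.04 US gallons
```

Working in feet from the start (a 6 ft diameter and 6 ft height) gives the same 169.65 cubic feet, so converting units first is fine as long as the multiplication is carried out correctly.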

I am just confirming that ChatGPT may have its uses, but the level of inaccurate information I've found so far is staggering, including on building code questions.
