jar546

CBO

It looks like AI bots are starting to crawl us for relevant information to help build their models. We are slightly more relevant, I suppose.

The site is routinely crawled by bots every second of the day, mostly search engines, which help us to be found. Having AI bots crawl us for information, specifically the content, only shows that we are relevant enough to retrieve data.

I don't know if that's good news or bad news.

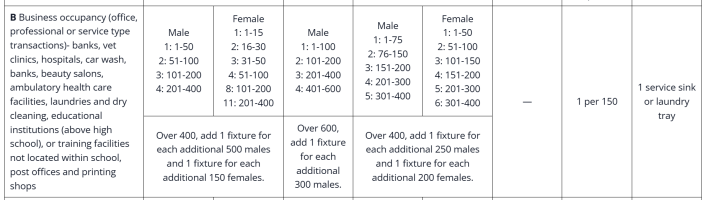

AI is full of problems right now, and any time you use it, you have to verify.

I’ve experimented with them. So far they are unable to distinguish between regional differences, older vs. newer versions of codes, or how to scope for a particular project.

It could probably be generally reliable for IRC single-family residential that has no unique energy codes or form-based zoning codes.

The fact that we can debate code issues into our 2nd decade here makes it unlikely that LLM / AI models will deliver consistently reliable info in the very near future.

As an ABET-accredited computer engineer and software developer, I can say that three factors critically affect the quality of AI outputs:

Lord knows for many years it stood for Anthropogenic Idiocy...

AI can stand for Artificial Idiocy as well as Artificial Intelligence.

I have a coworker who used ChatGPT to figure out what the code requirements are for [insert literally anything]. He's partially or completely wrong most of the time.

The one thing that I've noticed is that AI models are extremely unreliable and often wrong, even when you copy and paste something. Everything needs to be proofread first. I've also noticed that once it starts making a mistake and then the correction is wrong, it's over. It never gets better, only worse. I just stopped after two correction attempts.

Pretty sure that's partially caused by people's loneliness and social isolation that the pandemic accelerated. Lock a bunch of people in their homes for months or years, make them only interact with people over Zoom, and you end up with a bunch of socially awkward kids and young adults who are way too reliant on technology for everything. I read something recently that AI being constantly nice and never critical is also a driving factor for this (what Yikes posted about). Constant validation (or at least no criticism) is very strong for the depressed generation.

I just heard a story today that a surprising percentage of young people would be willing to marry a non-human entity (I couldn't bring myself to listen to the entire thing, but they were speaking of A/I). Maybe it is the shine-on Yikes posted about. Maybe it's because human intelligence is no less artificial than artificial intelligence.

I don't doubt that at all. If you are an expert in your field, you really see how inaccurate AI is.

You're being generous. Basic reading and critical thinking are all you really need to figure out it's wrong.

- Data Quality: "Garbage in, garbage out" holds true. Even the best models will fail if they're trained or prompted with biased, incomplete, or low-quality data.